“I can’t help being a little glad that the telegraph companies have had this object lesson…Wireless is affected by certain things which do not hinder the ordinary lines, but in this matter we have the advantage” [Marconi, 1909]

When the Galaxy IV satellite ceased operating on May 19, 1998, millions of pager owners woke up the next day to discover that their high-tech devices had turned into useless pieces of plastic. When they got into their cars and tried to pump gas at the local gas station, the pumps rejected their credit cards because they could no longer use the satellite to transmit and receive verification codes. Some 100,000 privately owned satellite dishes across North America had to be repointed at a cost of $100 each. The British Broadcasting Corporation’s news program on Houston’s KPFT radio station went silent, so the station turned to the Internet to gain access to the program instead. That day’s story was about criminals in Bombay who launder their money through the movie industry and were prone to kill the director if a movie bombed at the box office. Meanwhile, Data Transmission Network Corporation lost service to its 160,000 subscribers, costing the company over $6 million. Many newspapers and wire services noted that this was the day the Muzak died, because Galaxy IV also took with it the feed from the Seattle-based music service. Many who had always thought that ‘elevator music’ was annoying realized, for the first time in their lives, just how much they missed hearing it. We can mostly survive these kinds of annoyances, but the impact of the satellite outage spread into other, life-critical corners of our society as well. Hospitals had trouble paging their doctors for emergency calls on Wednesday morning. Potential organ recipients who had come to rely on this electronic signaling system to alert them to a life-saving operation did not get paged. In the ensuing weeks, many newspapers, including USA Today, ran cautionary stories about how we have become too reliant on satellites for critical tasks and services in our society.
Even President Clinton ordered a complete evaluation of our vulnerability to high-tech incidents, some of which could be caused by terrorists.

Satellites represent a unique technology that has grown up alongside our understanding of the geospace environment. The first satellites ever launched, such as Explorer 1, were specifically designed to detect and measure the space environment. Less than five years later, the first commercial satellite, Telstar 1, was pressed into service. There has never been a time when we did not know that space weather affects satellite technology. Yet despite the nearly 40 years that have gone by since Telstar, Echo and Relay, satellite technology is, in many ways, still in its infancy.

The technologies of the telegraph, telephone, power lines and wireless communication went through short learning phases before becoming mature resources that millions of people could count on. History shows that the pace of this development was slow and methodical. The telephone was invented in 1871, but it took over 90 years before 100 million people were using telephones and expecting regular service. The growing radio communication industry had eight million listeners by 1910 and 100 million by 1940. Satellite technology, on the other hand, took less than five years to reach 100 million people, between the launch of Explorer 1 in 1958 and that of Telstar 1 in 1962. This happened with a single two-watt transmitter on a 170-pound satellite. More recently, wireless cellular telephones reached over 100 million consumers in less than five years after their introduction around 1990. How many times a month do you ‘swipe’ your ATM card at the service station to pump gas? Retail cash verification systems are sweeping the country, and all of them use satellites at some point in their validation process.

The International Telecommunication Union in Geneva predicted that between 1996 and 2005, demand for voice and data transmission services would grow from $700 billion to $1.2 trillion, with the share carried by satellite services reaching a staggering $80 billion. Thomas Watts, vice president of Merrill Lynch’s US Fundamental Equity Research, conducted a study predicting global satellite revenues of $171 billion per year by 2007. To meet this demand, many commercial companies are launching aggressive networks of Low Earth Orbit satellites, the new frontier in satellite communications.

In the eyes of the satellite community, we live in a neo-Aristotelian universe. The sub-lunar realm, as the ancients called it, is divided into several distinct arenas, each with its own technological challenges and opportunities. Most manned activities involving the Space Shuttle and Space Station take place in orbits 200 to 500 miles above the surface. Pound for pound, this is the least expensive environment in which to place a satellite, but it is also of limited use: each satellite sees only a small patch of the ground, so many more satellites are needed to cover the Earth.

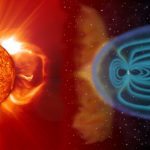

Then we have Low Earth Orbit (LEO), which spans a zone from approximately 400 to 1,500 miles above the surface. LEO is the current darling of the satellite industry because, from these orbits, round-trip radio signal delays are only about 0.01 seconds, compared to about 0.20 seconds from geosynchronous orbit. But there are, of course, several liabilities with such low orbits. The biggest of these is the Earth’s atmosphere itself, and the way it inflates during solar storms. This creates high-drag conditions that can lower satellite orbits by tens of miles at a time. In addition, the most intense regions of the Van Allen radiation belts reach down to 400 miles over South America. Satellites in LEO will spend a significant part of their orbital periods flying through these clouds of particles, which can cause single-event upsets (SEUs) in onboard electronics. Finally, although GEO satellite delays are long, a signal that passes through several links in a LEO satellite network can see its original 0.01-second ‘latency’ stretch to nearly GEO time lags. Many industry analysts have used this to argue that LEO orbits may not offer much of an advantage for some applications.
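The latency comparison above comes down to light-travel-time arithmetic. The sketch below assumes an idealized geometry: the satellite directly overhead, signals traveling in straight lines at the vacuum speed of light, and no processing, queuing or ground-network delay. The function names and the 2,500-mile crosslink spacing are hypothetical choices for illustration, not parameters of any real network. Under these assumptions a single GEO hop comes out near 0.24 seconds, in line with the roughly 0.2-second figure above, a single LEO hop from 1,000 miles takes about 0.01 seconds, and a LEO path relayed through many satellites creeps back toward GEO-like delays.

```python
# Idealized light-travel-time delays for satellite links.
# Assumptions (not from the source): straight-line paths, satellite at zenith,
# vacuum speed of light, and zero processing or queuing delay.

C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s
KM_PER_MILE = 1.609344    # international mile


def single_hop_delay_s(altitude_miles: float) -> float:
    """Ground -> satellite -> ground delay for a satellite directly overhead."""
    path_km = 2 * altitude_miles * KM_PER_MILE
    return path_km / C_KM_PER_S


def relayed_delay_s(altitude_miles: float, crosslink_miles: float, n_crosslinks: int) -> float:
    """Uplink, n inter-satellite crosslinks, then downlink (hypothetical LEO relay path)."""
    path_km = (2 * altitude_miles + n_crosslinks * crosslink_miles) * KM_PER_MILE
    return path_km / C_KM_PER_S


if __name__ == "__main__":
    geo = single_hop_delay_s(22_300)             # Clarke Belt altitude
    leo = single_hop_delay_s(1_000)              # mid-range LEO altitude
    relayed = relayed_delay_s(1_000, 2_500, 10)  # hypothetical 10-crosslink path
    print(f"GEO single hop:     {geo:.3f} s")
    print(f"LEO single hop:     {leo:.3f} s")
    print(f"LEO, 10 crosslinks: {relayed:.3f} s")
```

With these assumptions the script prints about 0.239 s, 0.011 s and 0.145 s. Real links add slant-range geometry and switching delays on top of these floor values.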

Between 6,000 and 12,000 miles, we enter Mid Earth Orbit (MEO) space, which was originally used by the Telstar 1 satellite but is currently not considered economically worth the incremental advantage it provides for communication satellites. ICO Global Communications, however, will be setting up a $4.5 billion MEO network of 12 satellites at 10,350 km (about 6,400 miles) to provide a global telephone service for an anticipated 14 million subscribers soon after its completion in 2000.

Finally, at 22,300 miles above the Earth’s surface, we enter the so-called Clarke Belt of geostationary orbits (GEO). Syncom 3 was the first communications satellite boosted into this orbit, back in 1964, and with only three such satellites you can cover most of the world in a seamless communications network. The problem is that it is nearly 10 times more expensive per pound to place a satellite at such high altitudes than in LEO or MEO. There are currently over 800 satellites in GEO serving the global needs of commercial and military communications and reconnaissance. In the next few years, Loral Space & Communications’ three-satellite Cyberstar system will be added to this mix for $1.6 billion.

Motorola’s $5 billion Iridium network, which now consists of 72 satellites including four spares, was the first large network to take the LEO stage, in 1998. By 1999, Orbital Sciences had also completed a 36-satellite LEO network called Orbcomm. Then came Globalstar’s 52-satellite network orbiting at 876 miles; completed in December 1999, it was built at a cost of $3.5 billion. In the near future, LEO will become ever more crowded with new players offering different services. The Ellipso network will be a 12-satellite LEO system for Mobile Communications Holdings Inc., to be built for $1.4 billion and placed in service in 2000. Alcatel’s Skybridge will cost $4.2 billion and consist of 64 satellites, with service beginning in 2001-2002; it is expected to have over 400 million subscribers by 2005. Hughes Electronics will put up SpaceWay for $4.3 billion and have it ready for users by 2002. Alcatel also plans to launch a network of 63 LEO satellites for civil navigation beginning in 2003. This Global Navigation Satellite System-2 will be operational by 2004 as a joint international venture between European and non-European partners. It will cost about $636 million and provide 5-10 meter position accuracy, compared with the 100-meter accuracy that the military GPS system provides for non-classified use. Finally, Telespazio of Italy’s nine-satellite Astrolink system will soon join Loral’s Cyberstar network in GEO.

The most amazing and audacious of these ‘Big LEO’ systems is the Teledesic system, backed by Microsoft’s Bill Gates. For $9 billion, 288 satellites will be placed in LEO to provide 64 megabit-per-second data links for an ‘Internet in the Sky’. Motorola will build another spacecraft manufacturing facility to support the production of these satellites, and to capitalize on its experience with the Iridium production process. Making Teledesic a reality will require manufacturing four satellites per week to meet the network availability goal for 2003. These small but sophisticated 2,000-kilogram satellites will have electric propulsion, laser communication links, and silicon solar panels.

The total cost of these systems alone represents a hardware investment by the commercial satellite industry of over $35 billion between 1998 and 2004. No one really knows how vulnerable these complex systems will be to solar storms and flares. Based on past experience, satellite problems, when they do appear, will probably vary in complex ways depending on the technology used and where the satellites are deployed in space. What is apparent from the public descriptions of these networks is that the planned satellites share some disturbing characteristics: they are all lightweight, sophisticated, and built at the lowest cost, following only a handful of design types replicated dozens or even hundreds of times.

Beyond the commercial pressure to venture into the LEO arena, there is the financial pressure to do so at the lowest possible cost. This has resulted in a new outlook on satellite manufacturing that is quite distinct from the strategies used decades ago. According to W. David Thompson, President of Spectrum Astro,

“The game has changed from who has 2 million square feet and 500 employees, to who can screw it together fastest”.

Satellite systems that once cost $300 million now cost $50 million. Space hardware, tools and test equipment are now so plentiful that 80% of a satellite can be purchased and the rest built in-house as needed. The race to meet an ad-hoc schedule set by industry competition to ‘be there first’ has led to some rather amazing tradeoffs that would have shocked older space engineers. From the 1960s to the 1980s, you did all you could to make certain that individual satellites were as robust as possible. Satellites were expensive and one-of-a-kind. Today, a very different mentality has grown up in the industry. For instance, Peter de Selding, a reporter for Space News, described how Motorola officials had apparently known by 1998 that to keep to their launch schedule they would have to put up with in-orbit failures. Motorola was expecting to lose six Iridium satellites per year even before the first one was launched. At the ‘Fourth International Symposium on Small Satellite Systems’ on September 16, 1998, Motorola officials said that the company would launch 79 Iridium satellites in just over two years by scrapping launch-site procedures that conventional satellite owners swear by. “Schedule is everything…the product we deliver is not a satellite, it is a constellation,” says Suzy Dippe, Motorola’s senior launch operations manager. A 10% satellite failure rate would be tolerated if it meant keeping to a launch schedule. Of course, it is the satellite insurance industry that takes the biggest loss from these failures, at least over the short term.

Satellite insurers are now getting worried that manufacturers may be cutting too many corners in satellite design. The sparring between insurers and manufacturers has become increasingly vocal since 1997. During that year (the first year after the sunspot minimum between cycles 22 and 23), insurance companies paid out $300 million in claims, prompting Benito Pagnanel, deputy general manager at Assicurazioni Generali S.p.A., to lament that,

“The number of anomalies in satellites appear to be constantly on the rise….because pre-launch tests and in-flight qualification of new satellite components have been cut down to reduce costs and sharpen a competitive edge”.

But Jack Juraco, senior vice president of commercial programs at Hughes Space and Communications, disagreed rather strenuously with the idea that pre-launch quality was being short-changed.

“The number of failures has not gone up as a percent of the total…What has happened is that we have more satellites being launched than previously. The total number of anomalies will go up even if the rate of failure is not increasing”.
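Juraco’s point is about rates versus counts: if the fraction of satellites that suffer anomalies stays fixed while the fleet grows, the number of anomalies still rises. The sketch below makes that arithmetic explicit. The function names, the fleet sizes and the 5% anomaly rate are hypothetical values chosen for illustration, not industry data.

```python
# Hypothetical illustration of Juraco's argument: a constant per-satellite
# anomaly rate still produces a rising anomaly count as the fleet grows.
# The fleet sizes and the 5% rate below are made-up numbers, not real data.


def expected_anomalies(fleet_sizes: list[int], anomaly_rate: float) -> list[float]:
    """Expected anomaly count for each year, given a fixed per-satellite rate."""
    return [n * anomaly_rate for n in fleet_sizes]


def observed_rates(anomaly_counts: list[float], fleet_sizes: list[int]) -> list[float]:
    """Anomalies as a fraction of the fleet, the statistic Juraco emphasizes."""
    return [count / n for count, n in zip(anomaly_counts, fleet_sizes)]


if __name__ == "__main__":
    fleets = [100, 150, 200, 300]  # hypothetical satellites in service per year
    counts = expected_anomalies(fleets, 0.05)
    rates = observed_rates(counts, fleets)
    for n, c, r in zip(fleets, counts, rates):
        print(f"fleet={n:4d}  anomalies={c:5.1f}  rate={r:.0%}")
```

The anomaly count triples from 5 to 15 while the rate column stays at 5%, which is why the same claims history can be read as a worsening problem or as a stable one depending on which number you look at.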

Per Englesson, deputy manager of the Stockholm-based satellite insurer Skandia International, was rather less impressed by the industry’s claims that quality was not part of the problem bedeviling the satellite industry: “anomalies aboard orbiting satellites have reached unprecedented proportions”. He suspected that one reason for the on-board failures is that relatively small organizations are buying satellites without hiring the technical expertise needed to oversee their construction. He criticized satellite owners who refuse to get involved in technical evaluations of hardware reliability, leaving all such issues for insurers to figure out instead.

Despite a miserable 1998, which cost them over $600 million in in-orbit satellite payouts, insurance companies still regard the risk of in-orbit failure as a manageable problem. Launch services and space insurance markets generated $8 billion in 1997 and $10 billion in 1998. Since private insurers entered the space business in the 1960s, they have collected $4.2 billion in premiums and paid out $3.4 billion in claims. Insurers consider today’s conditions a buyer’s market, with $1.2 billion of capacity available for each $200 million satellite, so there is plenty of capacity to cover risk needs.

As with any insurance policy a homeowner might buy, you have to deal with a broker and negotiate a package of coverages. In low-risk situations you pay a low annual premium, but you pay more if you are a poor driver, live on an earthquake fault, or own beach property subject to hurricane flooding. In the satellite business, just about every aspect of manufacturing, launching and operating a satellite can be insured, at rates that depend on the level of risk. Typically, 10-15 large insurers (called underwriters) and 20-30 smaller ones may participate in covering a given satellite. About 13 international underwriters provide roughly 75% of the total annual capacity. Launch premiums typically run from 8 to 15% of the insured value, covering risks associated with the launch itself. Once a satellite survives its shake-out period, in-orbit policies tend to cost about 1.2 to 1.5% per year over a planned 10-15 year life span. If a satellite experiences environmental or technological problems in orbit during the initial shake-out period, the premium paid by the owner can jump to 3.5 to 3.7% for the rest of the satellite’s lifetime. This is the only mechanism insurers have currently agreed upon to protect themselves against the possibility of a complete satellite failure. Once a policy is negotiated, the only way an insurer can avoid paying out the full cost of the satellite is in the event of war, a nuclear detonation, confiscation, electromagnetic interference, or willful acts by the owner that jeopardize the satellite. There is no exclusion for ‘Acts of God’ such as solar storms or other environmental problems. Insurers assume that if a satellite is sensitive to space weather effects, this will show up in its reliability record, which would then lead the insurer to invoke higher premium rates for the remaining life of the satellite.
Currently, insurers pay no attention to the solar cycle; they assess risk based only on the past history of the satellite’s technology.
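To see what these percentages mean in dollars, the sketch below totals the premiums on a single satellite. It assumes a $200 million insured value (the figure used for capacity above) held constant over the policy, a 12% launch premium, 12 years in orbit, and a flat annual in-orbit rate of either 1.3% (a satellite that passes its shake-out period cleanly) or 3.6% (one that had problems). The function name and all of these specific values are hypothetical choices within the ranges quoted in the text; real policies are negotiated case by case.

```python
# Rough premium arithmetic for one satellite (hypothetical values within the
# ranges quoted in the text; real policies are negotiated case by case).


def total_premium(insured_value: float, launch_rate: float,
                  annual_in_orbit_rate: float, years_in_orbit: int) -> float:
    """Launch premium plus a flat annual in-orbit premium.

    Simplification: the insured value is held constant for the whole policy.
    """
    launch_premium = insured_value * launch_rate
    in_orbit_premium = insured_value * annual_in_orbit_rate * years_in_orbit
    return launch_premium + in_orbit_premium


if __name__ == "__main__":
    value = 200e6  # $200 million satellite
    healthy = total_premium(value, 0.12, 0.013, 12)   # clean shake-out period
    troubled = total_premium(value, 0.12, 0.036, 12)  # anomalies during shake-out
    print(f"healthy satellite:  ${healthy / 1e6:.1f} million")   # $55.2 million
    print(f"troubled satellite: ${troubled / 1e6:.1f} million")  # $110.4 million
```

Under these assumptions, an anomaly during the shake-out period roughly doubles the total premium paid over the satellite’s life.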

As you can well imagine, the relationship between underwriters and the satellite industry is complicated and, at times, volatile. Most of the time it can be characterized as cooperative because of the mutual interdependence between underwriters and satellite owners. During bad years like 1998, underwriters can lose their shirts and make hardly any profit from this calculated risk-taking. Over the long term, however, satellite insurance can be a stable source of revenue and profit, especially when the portion of the risk due to launch mishaps is factored out of the equation. As the Cox Report notes,

“The satellite owner has every incentive to place the satellite in orbit and make it operational because obtaining an insurance settlement in the event of a loss does not help the owner continue to operate its telecommunications business in the future. To increase the client’s motivation to complete the project successfully, underwriters will also ask the client to retain a percentage [typically 20%] of the risk” [Cox Report, 1999]

According to Philippe-Alain Duflot, Director of the Commercial Division of AGF France,

“…the main space insurance players have built up long-term relations of trust with the main space industry players, which is to say the launch service providers, satellite manufacturers and operators. And these sustained relations are not going to be called into question on account of an accident or series of unfortunate incidents”.

Still, there are disputes that emerge which are now leading to significant changes in this relationship. Satellite owners, for instance, sometimes claim a complete loss on a satellite after it reaches orbit, even if a sizable fraction of its operating capacity remains intact after a ‘glitch’. According to Peter D. Nesgos of the New York law firm Winthrop, Stimson, Putnam and Roberts as quoted by Space News,

“In more than a dozen recent cases, anomalies have occurred on satellites whose operators say they can no longer fulfill their business plans, even though part of the satellite’s capacity can still be used”

This has caused insurance brokers to rethink how they write their policies, and underwriters to insist on provisions for partial salvage of the satellite. In 1995, the Koreasat-1 telecommunications satellite, owned by Korea Telecom of South Korea, triggered just such a dispute. In a more recent dispute, underwriters actually sued satellite manufacturer Spar Aerospace of Mississauga, Canada, over the AMSC-1 satellite, demanding full reimbursement of $135 million. They allege that the manufacturer ‘covered up test data that showed a Spar-built component was defective’. Some insurers are beginning to balk at vague language that seemingly gives satellite owners a blank check to force underwriters to insure just about anything the owners insist on.

One obvious reason why satellite owners are openly averse to admitting that space weather is a factor is that it can jeopardize reliability estimates for their technology, and thus affect the negotiation between owner and underwriter. If the underwriter deems your satellite poorly designed to mitigate radiation damage or other impulsive space weather events, they may elect to levy a higher premium rate during the in-orbit phase of the policy. They may also offer you a ‘launch plus five year’ rather than a ‘launch plus one year’ shakeout period. This issue is becoming a volatile one. A growing number of stories in the trade journals since 1997 report that insurance companies are increasingly vexed by what they see as a decline in manufacturing techniques and quality control. In a rush to make satellites lighter and more sophisticated, owners such as Iridium LLC are willing to lose six satellites per year. What usually isn’t mentioned is that they also claim on their insurance policies for these losses, and the underwriters then have to pay out tens of millions of dollars per satellite. In essence, the underwriter is forced to pay the owner for using risky satellite designs, even though this works against the whole idea of an underwriter charging higher rates for known risk factors. Of course, when the terms of the policy are negotiated, underwriters are fully aware of this planned risk and failure rate, but are willing to accept it in order to profit from the other, less risky elements of the agreement. It is hard to turn down a five-year policy on a $4 billion network that will only cost a few hundred million dollars in eventual payouts. The fact is that insurers will insure just about anything that commercial satellite owners can put in orbit, so long as the owners are willing to pay the higher premiums.
Space weather enters the equation because, at least publicly, it is a wild card that underwriters have not fully taken into consideration. They seemingly charge the same in-orbit rates (1.2 to 3.7%) regardless of which phase of the solar cycle we are in.

It used to be that satellite components, like grapes for a fine wine, were hand-selected from only the best parts. The term ‘mil-spec’ (military specifications) denoted components built to the highest quality standards and, in most cases, with considerable radiation tolerance. Not anymore. One of the most serious problems that comes up again and again is the use of ‘off-the-shelf’ electronics. They are readily available, cheap, and an irresistible lure for satellite manufacturers working within fixed or diminishing budgets. This is frequently touted as good news for consumers because the cost per satellite becomes very low when items can be mass-produced rather than built one at a time.

One satellite network that expects to keep costs down by using off-the-shelf electronics is the Teledesic system. But it is already off to what appears to be a rocky start. In the May 7, 1998 issue of International Space Industry Report, reporter Keith Stein describes how the Teledesic 1 (T1) experimental satellite is no longer operating as planned. Its purpose was to conduct a series of communications tests on three channels between 18 and 450 MHz to demonstrate the high-data-rate capabilities of the telemetry that will be used with the full network of 288 satellites. No details were given about the cause of the malfunction, the systems involved, or the time when the satellite failed. Meanwhile, Motorola plans to complete the manufacture of the network’s satellites in a whirlwind 14 months, as soon as it gets the green light to start production. Celso Azevedo, President and CEO of the Lockheed Martin-supported Astrolink, notes in an interview with Satellite Communications magazine that,

“You have to minimize your technological risks. GEO architecture is proven. When utilizing new technology, developers have a tendency to go too far and stretch the envelope, which is what Teledesic is doing. The project is unlaunchable, unfinancable and unbuildable”

The number of basic satellite designs also continues to fall, as mass production floods geospace with a less diverse population of satellites built on similar designs and similar assumptions about space weather hazards. These designs are manufactured by Lockheed Martin, Hughes and Motorola. For example, there are currently 40 HS-601 satellites of the same model as Galaxy IV in operation, including PanAmSat’s Galaxy-7 and DirecTV’s DBS-1, which also experienced primary control processor failures. Motorola’s Iridium network lost seven of its identical satellites by August 1998. According to Alden Richards, CEO of a Greenwich, Connecticut risk management firm, “These problems are not insignificant. Insurers are clearly concerned that there have been these anomalies.”

More and more often, satellite insurance companies are finding themselves paying out claims, but not for the familiar risk of launching a satellite on a particular rocket. In the past, the biggest liability was launch vehicle failure, not satellite technology. As more satellites have been placed in orbit successfully, a new body of insurance claims has grown at an unexpected rate. According to Jeffrey Cassidy, senior vice president of the aerospace division of A.C.E. Insurance Company Ltd., as many as 11 satellites had insured losses in 1996 during their first year of operation. The identities of these satellites, however, were not divulged, nor were the names of their owners.

According to Space News, satellite insurance companies are reeling from insurance claims that totaled $750 million during the first half of 1998 alone. Most of these claims were for rocket explosions at launch, such as the one that destroyed the Galaxy 10 satellite at a cost of $250 million. The remaining claims, however, included the on-orbit failures of Galaxy 4 ($200 million) and seven of the Iridium satellites. By 1999, a new trend in insurance payouts had begun to emerge. “Satellite Failures put Big Squeeze on Underwriters,” read the headline of an article by Peter de Selding in Space News.

“[1998] will go down in space-insurance logbooks as the most costly in history…one notable trend in 1998 was the fact that failures of satellites already in orbit accounted for more losses than those stemming from rocket failures.”

Despite the rough times that both manufacturers and insurers seem to be having, both are grimly determined to continue their investments. Assicurazioni Generali S.p.A. of Trieste, the biggest underwriter, has no plans to reduce its participation in space coverage, but at the same time thinks very poorly of the satellite manufacturing process itself. Giovanni Gobbo, Assicurazioni’s space department manager, is quoted as saying, “I would not buy a household appliance that had as many reliability problems as today’s satellites”. The biggest payout in 1998 was $254 million for 12 satellites in the Iridium program; five were destroyed at launch. Despite all the dramatic failures, the satellite insurance companies actually lowered their launch rates from 15-16% in 1996 to 12-13% in 1997. Meanwhile, in-orbit insurance rates, the kind affected by space weather problems, have remained at 1-2% per year of the total replacement cost. Industry insiders do not expect this pricing to remain so low. With more satellite failures expected in the next few years, these rates may increase dramatically.

When satellites fail, another pattern also seems to hold more often than not. The lessons learned from satellite malfunctions, beginning with Telstar 1, now seem to have been lost from public discussions of cause and effect. We have entered a new age in which ‘mysterious’ satellite anomalies have suddenly bloomed as if from out of nowhere. The default explanation for satellite problems has moved away from public discussion of sensitive technology in a hostile environment, toward guarded post-mortems that point the finger at insurable causes.

The nearly $600 million in in-orbit satellite failures that insurance companies had to pay out on in 1998 alone has prompted questions about whether spacecraft builders are cutting costs in some important way to increase profit margins, especially as the number of satellite anomalies continues to rise. Between 1995 and 1997, 38% of the $900 million that insurance companies paid out in claims went to on-orbit satellite difficulties. Since the early 1980s, satellite failure claims have doubled in value, from $200 million to $400 million annually. The satellite manufacturers argue that, compared to the number of satellites launched and functioning normally, the percentage of anomalies and failures has remained nearly the same over the last two decades. Hughes Space and Communications, for example, has 67 satellites and has seen no percentage change in its failure rate. The manufacturers use this to support the idea that satellite failures are inherent to the technology, not to a space environment that changes with the solar cycle. According to Michael Houterman, president of Hughes Space and Communications International, Inc. of Los Angeles, the spate of failures in the HS-601 satellites is a result of ‘design defects’, not of production-schedule pressure or poor workmanship:

“Most of our quality problems can be traced back to component design defects. We need, and are working toward, more discipline in our design process so that we can ensure higher rates [of reliability]”.

Satellite analyst Timothy Logue at the Washington law firm of Coudert Brothers begs to differ:

“The commercial satellite manufacturing industry went to a better, faster, cheaper approach, and it looks like reliability has suffered a bit, at least in the short term”.

Curiously absent from virtually every report of a communications satellite problem is the simple acknowledgment that space is not a benign environment for satellites. The bottom line is that communications technology has expanded its beachhead in near-Earth space to include thousands of satellites. These complex systems seem to be remarkably robust, although for those that happen to be in the wrong place at the wrong time, failure in orbit can be tied to solar storm events. The data, however, are sparse and circumstantial, because we can never retrieve the satellites to determine what actually affected them. Satellite manufacturers often look for technological problems to explain why satellites fail, while scientists look at the spacecraft’s environment to find triggering events. What seems to frustrate the satellite manufacturing industry is that, when in-orbit malfunctions occur, each one seems to be unique, and the manufacturers can find no obvious pattern to them. Like a tornado entering a trailer park, when space weather effects present themselves in complex ways across a trillion cubic miles of space, some satellites can be affected while others remain intact.

Currently we can only speculate that ‘Storm A killed satellite B’ or that ‘a bad switch design was at fault’. Since there is no free flow of information between industry and scientists, and the satellites can’t be recovered, the search for a ‘true cause’ remains a maddeningly elusive goal. But the playing field is not exactly level when it comes to scientists and industries searching for answers, and this usually works to the direct benefit of the satellite owners.

There is a tremendous incentive built into the industrial investigative process to explain satellite failures as non-storm events. The scientific position, on the other hand, is that we really, truly don’t know for certain why specific satellites fail, no matter how much circumstantial evidence we accumulate. This is especially true when commercial and military satellite owners refuse to disclose the details of how their satellites were affected. Without the proverbial ‘black box’, the Federal Aviation Administration would have an awful time reconstructing the details of plane crashes under similar circumstances. Uncertainty is what science is all about, and this, unfortunately, also plays into the hands of the satellite industry in its efforts to find non-solar explanations for every satellite malfunction. Part of the reason for this uncertainty is that simultaneous events aren’t always related to each other by cause and effect. Sometimes, complex technology does simply stop working on its own.

You might recall that, at one point, it seemed very convincing that the Exxon Valdez had suffered unseen navigation problems caused by a solar storm then in progress. The only problem is that the navigation aids in use on the Exxon Valdez were largely immune to magnetic storms, so the plausible story that a magnetic storm put the Exxon Valdez on the wrong course is, itself, quite wrong. A coincidence in time is not proof of cause, and this is why it is so hard to tie specific satellite failures to solar storm events, even when the available circumstantial evidence makes it look like a sure bet. You can’t recover the satellite to autopsy it and confirm what really happened.

If there are thousands of working satellites in space, why does a specific storm seem to affect only a few of them? If solar storms are so potent, why don’t they take out many satellites at a time? Solar storms are at least as complex as tornadoes. We know that a tornado can destroy one house and leave its neighbors unscathed, but this doesn’t lead us to conclude that the tornado was not responsible for the damage we see. The problem with solar storms is that they are nearly invisible. We hardly see them coming, and the data needed to establish specific cause-and-effect relationships are usually incomplete, classified, scattered among hundreds of different institutions, or anecdotal. For this reason, any scientist attempting to correlate a satellite outage or ‘anomaly’ with a particular solar storm fights something of an uphill battle. There is usually only circumstantial evidence available, and the details of the satellite’s design and functions up to the moment of blackout are shrouded in secrecy.

Commercial satellite companies, meanwhile, would prefer that this subject not be brought into the light for fear of compromising their fragile edge in a highly competitive market. In a volatile industry driven by stock values and quarterly profits, no company wants to disclose its anomalies, or make its data public for scientists to study. Iridium’s stock took a major stumble during the summer of 1998 in the aftermath of seven satellite outages, as investors got cold feet over the technology. The company went bankrupt in 1999.

The first satellite to fall victim to space weather effects was, in fact, one of the first commercial satellites ever launched into orbit: Telstar 1, launched in July 1962. In November of that year, it suddenly ceased to operate. Guided by the data returned by the satellite, Bell Telephone Laboratories engineers on the ground subjected a working twin of Telstar to artificial radiation sources, and were able to get it to fail in the same way. The problem was traced to a single transistor in the satellite’s command decoder. Excess charge had accumulated at one of the gates of the transistor, and the remedy was simply to turn off the satellite for a few seconds so the charge could dissipate. This did, in fact, work, and the satellite was brought back into operation in January 1963. The source of this information was not some obscure technical report, or an anecdote casually dropped in a conversation. This example of energetic particles in space causing a satellite outage was so uncontroversial at the time that it appeared under the heading ‘Telstar’ in the World Book Encyclopedia’s 1963 Yearbook.

Recast in today’s polarized atmosphere, the outcome would have been very different. The satellite owner would have declared the failure a technological problem with the quality of one of its transistors, and immediately filed an insurance claim to recover the cost of the satellite. The scientists, meanwhile, would have suspected that a space weather event had, instead, charged the satellite. Their findings would be published in obscure journals and trade magazines, or shown on viewgraphs in technical and scientific presentations. An artificial public ‘mystery’ would have been generated, adding to a growing sense of confusion about why satellites fail in orbit. Despite our increased understanding of space weather effects, more satellites seem to succumb mysteriously to outages while in service. It’s as if we are having to learn, all over again, that space is fundamentally a hostile environment, even when it looks benign on the basis of sparse scientific data.

Of course, satellite owners and electrical utility managers are unwilling, or in some cases unable, to itemize every system anomaly, just as the major car manufacturers are not about to publicly list all of the known defects in their products. On the other hand, the auto industry has learned that it is cheaper to admit to rare but significant life-threatening problems and voluntarily recall a product than to wait for a crushing class-action lawsuit. Rarely do commercial satellite owners give specific dates and times for their outages, and in the case of Iridium, even the specific satellite designations are suppressed, as is any public discussion about the causes of the outages themselves. If this is to be the wave of the future in commercial satellite reportage, especially from the Big LEO networks, we are in for a protracted period of confusion about causes and effects. Anecdotal information provided by confidential sources will be our only, albeit imperfect, portal into what is going on in the commercial satellite industry. Without specific dates and reasons for failure, scientists cannot work through the research data to identify plausible space weather effects, or to show that they were irrelevant. This also means that the open investigation into why satellites fail, which could lead to improvements in satellite design and greater consumer satisfaction with satellite services, has all but ended. As Robert Sheldon notes,

“…the official AT&T failure report [about the Telstar 401] as presented by Dr. Lou Lanzerotti at the Spring AGU Meeting denied all space weather influence and instead listed three possible [technological] mechanisms…This denial of space weather influence at this meeting was met with a murmuring wave of disbelief from the audience who no doubt had vested interests in space weather”.

For years, Joseph Allen and Daniel Wilkinson at NOAA’s Space Environment Center kept a master file of reported satellite anomalies from commercial and military sources. The collection included well over 9000 incidents reported through the 1990s. This voluntary flow of information dried up rather suddenly in 1998 as one satellite owner after another stopped providing these reports. From then on, information about satellite problems during Cycle 23 would be nearly impossible to obtain for scientific research. More than ever, examples of satellite problems would have to come from occasional reports in the open trade literature, and these would cover only the most severe, and infrequent, full outages. There would be no easy record of the far more numerous minor mishaps which, judging from the frequency of past anomaly reports, occur on a daily or weekly basis.

Meanwhile, the satellite industry seems emboldened by what appears, on the surface, to be a good record of surviving most solar storm events during the last decade. With billions of dollars of potential revenue to be harvested in the next 5-10 years, we will not see an end to the present face-offs between owners, insurers and scientists. For consumers of the new satellite-based products: caveat emptor. The next outage may come as suddenly as a power blackout, and find you just as ill-prepared to weather its consequences. In the end, solar storms may seek you out in unexpected places and on unexpected occasions, touching you electronically through your pager, cellular phone and Internet connection. All of this from across 93 million miles of space.
